ClenchClick: Hands-free Target Selection Methods in AR

Keywords: Hands-free Interaction, Augmented Reality, EMG Sensing

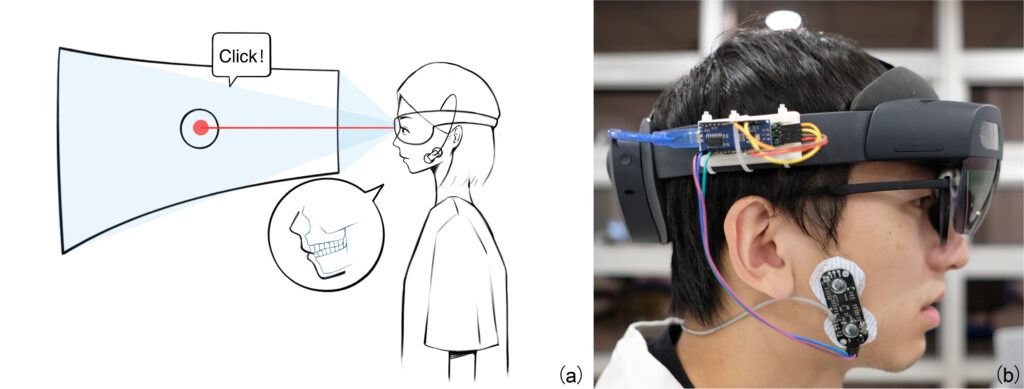

In ClenchClick, we explore teeth-clenching-based target selection in Augmented Reality (AR), since the subtlety of the interaction can benefit applications that occupy the user's hands or that are sensitive to social norms. We first explored and compared potential interaction designs that combine head movements and teeth clenching. We settled on a Point-and-Click design in which a clench serves as the confirmation mechanism. To support this investigation, we implemented an EMG-based teeth-clenching detection system that adopts ML-customized thresholds for different users. We evaluated the task load and performance of ClenchClick by comparing it with two baseline methods in target selection tasks. Results showed that ClenchClick outperformed hand gestures in workload, physical load, accuracy, and speed, and outperformed dwell in workload and temporal load. Lastly, through user studies, we demonstrated the advantages of ClenchClick in real-world tasks, including efficient and accurate hands-free target selection, natural and unobtrusive interaction in public, and robust head gesture input.

Target selection is one of the most basic tasks in Augmented Reality (AR). As AR headsets gain popularity both as productivity tools in industry and as personal assistants in daily life, many of their usage scenarios call for hands-free and subtle interaction. For example, assembly-line workers often have their hands occupied for long periods. In public spaces, users favor interactions that can be performed with subtle movements to avoid disturbing bystanders.

We argue that teeth clenches can serve as a hands-free and subtle confirmation method for target selection in AR that meets these needs. Compared with existing methods, including dwell-based and head-gesture-based selection confirmation, a clench provides explicit proprioceptive feedback as the teeth make contact, which strengthens the sense of control, and it does not require large head movements. Prior work has used different clench force levels and tongue-teeth contacts as input methods, but we found that combining head-movement-based cursor control with clench-based confirmation for target selection in AR remains underexplored. We therefore present ClenchClick, in which users control the pointer with head movement and confirm a selection by clenching while the pointer is within the target. Clenches are detected from EMG signals collected by add-on sensors, which sit in a container we designed to attach compactly to the headset. For the implementation, we built a real-time detection system and designed a calibration phase that provides a personalized threshold for each user to improve detection performance.
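The paragraph above combines three pieces: a head-driven pointer, a clench detector with a per-user threshold obtained in a calibration phase, and clench-confirmed selection. The sketch below shows one way these could fit together. It is a minimal sketch under stated assumptions, not the authors' implementation: the source does not describe its signal processing or its ML-customized thresholding, so here we assume windowed RMS amplitude as the EMG feature and a simple midpoint rule between the user's resting and clenching RMS as the personalized threshold. The names `head_to_cursor`, `calibrate_threshold`, `ClenchDetector`, `Target`, and `select_target`, and the flat target plane, are all hypothetical.

```python
import math
import statistics
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

# NOTE: all names and the thresholding rule below are hypothetical
# illustrations, not the ClenchClick implementation.


def head_to_cursor(yaw_deg: float, pitch_deg: float, distance: float) -> Tuple[float, float]:
    """Project the head's forward ray onto a plane `distance` metres ahead."""
    return (distance * math.tan(math.radians(yaw_deg)),
            distance * math.tan(math.radians(pitch_deg)))


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def calibrate_threshold(rest_windows: List[Sequence[float]],
                        clench_windows: List[Sequence[float]]) -> float:
    """Assumed per-user rule: midway between mean resting and mean clenching RMS."""
    rest = statistics.mean(rms(w) for w in rest_windows)
    clench = statistics.mean(rms(w) for w in clench_windows)
    return (rest + clench) / 2


@dataclass
class ClenchDetector:
    threshold: float
    active: bool = False  # True while the RMS stays above the threshold

    def update(self, window: Sequence[float]) -> bool:
        """Return True only on the rising edge, i.e. once per clench."""
        above = rms(window) > self.threshold
        fired = above and not self.active
        self.active = above
        return fired


@dataclass
class Target:
    x: float
    y: float
    radius: float

    def contains(self, px: float, py: float) -> bool:
        return math.hypot(px - self.x, py - self.y) <= self.radius


def select_target(targets: List[Target], cursor: Tuple[float, float],
                  clenched: bool) -> Optional[int]:
    """Point-and-Click: on a clench event, return the index of the target under the cursor."""
    if not clenched:
        return None
    for i, target in enumerate(targets):
        if target.contains(*cursor):
            return i
    return None


# Usage with synthetic numbers (not data from the paper).
rest = [[0.01, -0.02, 0.015, -0.01]] * 5
clench = [[0.3, -0.25, 0.28, -0.32]] * 5
detector = ClenchDetector(calibrate_threshold(rest, clench))
targets = [Target(0.0, 0.0, 0.1), Target(0.5, 0.0, 0.1)]
cursor = head_to_cursor(yaw_deg=14.0, pitch_deg=0.0, distance=2.0)
print(select_target(targets, cursor, detector.update(clench[0])))  # prints 1
```

The detector fires only on the rising edge, so a held clench confirms one selection rather than many. A practical system would likely also need filtering and a minimum clench duration to reject brief EMG spikes, which this sketch omits.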

Supported by the detection system, we thoroughly investigated the interaction design, the user experience in target selection tasks, and user performance in real-world tasks through a series of user studies. In our first user study, we explored nine potential designs and compared the three most promising ones (ClenchClick, ClenchCrossingTarget, ClenchCrossingEdge) with a hand-based baseline (Hand Gesture) and a hands-free baseline (Dwell) in target selection tasks. ClenchClick offered the best overall user experience with the lowest workload. It outperformed Hand Gesture in both physical and temporal load, and outperformed Dwell in temporal and mental load.

In the second study, we evaluated the performance of ClenchClick with two detection methods (General and Personalized), again in comparison with a hand-based (Hand Gesture) and a hands-free (Dwell) baseline. Results showed that ClenchClick outperformed Hand Gesture in accuracy (98.9% vs. 89.4%) and was comparable with Dwell in accuracy and efficiency. We further investigated users' behavioral characteristics by analyzing their cursor trajectories during the tasks, which showed that ClenchClick was a smoother target selection method. It was also more psychologically friendly and occupied less of the user's attention.

Finally, we conducted user studies in three real-world tasks covering hands-free, socially friendly, and head-gesture interaction. Results revealed that ClenchClick is an efficient and accurate target selection method when both hands are occupied, that it is socially friendly and satisfying to use in public, and that it can serve as an activation signal for head gestures, which significantly reduces false positives.
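The last finding, that a clench can activate head gestures and thereby reduce false positives, can be illustrated with a small state machine. This is a hypothetical sketch: the source does not describe its head-gesture recognizer, so the nod rule, the 1.5 s activation window, and the 15° amplitude below are assumptions chosen for illustration, and `ClenchGatedNod` is not from the paper. The idea is only that head motion is ignored unless it follows a detected clench.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClenchGatedNod:
    """Hypothetical nod recognizer that only listens after a clench.

    Head motion outside the activation window is ignored, which is one way
    a clench could suppress accidental (false-positive) head gestures.
    """
    window_s: float = 1.5        # assumed activation window after a clench
    amplitude_deg: float = 15.0  # assumed minimum downward pitch for a nod
    armed_until: float = float("-inf")
    baseline: Optional[float] = None
    went_down: bool = False

    def on_clench(self, t: float, pitch_deg: float) -> None:
        """Arm the recognizer and remember the head pitch at clench time."""
        self.armed_until = t + self.window_s
        self.baseline = pitch_deg
        self.went_down = False

    def on_pitch(self, t: float, pitch_deg: float) -> bool:
        """Feed one pitch sample (degrees, negative = down); True when a nod completes."""
        if self.baseline is None or t > self.armed_until:
            return False  # not armed: head motion is ignored
        delta = pitch_deg - self.baseline
        if delta <= -self.amplitude_deg:
            self.went_down = True  # head has dipped far enough
        elif self.went_down and abs(delta) < self.amplitude_deg / 3:
            self.armed_until = float("-inf")  # one gesture per clench
            self.went_down = False
            return True  # head returned near baseline: nod recognized
        return False


# The same nod-like motion, without and then with a preceding clench.
trace = [(1.2, -20.0), (1.5, -2.0)]
ungated = ClenchGatedNod()
print([ungated.on_pitch(t, p) for t, p in trace])  # [False, False]
gated = ClenchGatedNod()
gated.on_clench(1.0, 0.0)
print([gated.on_pitch(t, p) for t, p in trace])    # [False, True]
```

Because the recognizer disarms after one gesture and after the window expires, ordinary head movement during conversation or walking cannot trigger a command on its own in this sketch; only the clench-then-nod sequence does. The window length trades responsiveness against the chance of a stray head movement landing inside it.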