Research

MouseRing: Always-available Touchpad Interaction with IMU Rings

Xiyuan Shen, Chun Yu, Chen Liang, Xutong Wong, Yuanchun Shi, CHI 2024, Honorable Mention. Click to Browse the Paper.

Keywords: Ubiquitous Computing, IMU Sensing, Touch Interface, Physical Modeling

We propose MouseRing, a novel ring-shaped IMU device that enables continuous, touchpad-like finger sliding on unmodified physical surfaces. Tracking fine-grained finger movements with IMUs for continuous 2D cursor control poses significant challenges due to limited sensing capabilities. Our work suggests that finger-motion patterns and the inherent structure of joints provide useful physical knowledge, which leads us to improve motion perception accuracy by integrating physical priors into ML models. We created a motion dataset using infrared cameras, touchpads, and IMUs, and then identified several useful physical constraints, such as joint co-planarity, rigid constraints, and velocity consistency. These constraints help refine the finger-tracking predictions from an RNN model. By incorporating touch state detection as a cursor movement switch, we achieved precise cursor control. In a Fitts’ Law study, MouseRing demonstrated input efficiency comparable to touchpads. In real-world applications, MouseRing provided robust, efficient input and good usability across various surfaces and body postures.
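As a point of reference for the Fitts' Law study mentioned above, the sketch below computes the index of difficulty and throughput of a single pointing trial using the widely used Shannon formulation, ID = log2(D/W + 1). It is only an illustration: the function names are hypothetical, and the paper's exact analysis procedure (for example, whether effective width was used) is not stated here.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)


def throughput(distance: float, width: float, movement_time_s: float) -> float:
    """Throughput in bits per second for one trial (hypothetical helper)."""
    return index_of_difficulty(distance, width) / movement_time_s


if __name__ == "__main__":
    # Illustrative numbers only, not data from the study.
    print(index_of_difficulty(300.0, 100.0))
    print(throughput(300.0, 100.0, 0.8))
```

Comparing throughput across devices on the same set of distance and width conditions is one common way to judge whether two input methods are similarly efficient.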

ClenchClick: Hands-Free Target Selection Method Leveraging Teeth-Clench for Augmented Reality

Xiyuan Shen, Yukang Yan, Chun Yu, Yuanchun Shi, IMWUT 2022. Click to Browse the Paper.

Keywords: Hands-free Interaction, Augmented Reality, EMG Sensing

In ClenchClick, we explore teeth-clenching-based target selection in Augmented Reality (AR), as the subtlety of the interaction can benefit applications that occupy the user’s hands or that are sensitive to social norms. We first explored and compared potential interaction designs that combine head movements and teeth clenching. We finalized the interaction as a point-and-click technique with clenches as the confirmation mechanism. To support the investigation, we implemented an EMG-based teeth-clenching detection system, in which we adopted ML-customized thresholds for different users. We evaluated the workload and performance of ClenchClick by comparing it with two baseline methods, hand gestures and dwell, in target selection tasks. Results showed that ClenchClick outperformed hand gestures in workload, physical load, accuracy, and speed, and outperformed dwell in workload and temporal load. Lastly, through user studies, we demonstrated the advantages of ClenchClick in real-world tasks, including efficient and accurate hands-free target selection, natural and unobtrusive interaction in public, and robust head gesture input.
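To make the idea of a per-user threshold concrete, here is a minimal sketch of threshold-based clench detection on an EMG amplitude envelope. It is not the ClenchClick implementation: the abstract says the thresholds were customized with ML, whereas this sketch assumes a much simpler rule (the midpoint between a user's resting and clenching envelope levels from a short calibration recording). All function names are hypothetical.

```python
def moving_rms(signal, window):
    """Root-mean-square envelope over a trailing window of samples."""
    envelope = []
    for i in range(len(signal)):
        chunk = signal[max(0, i - window + 1): i + 1]
        envelope.append((sum(x * x for x in chunk) / len(chunk)) ** 0.5)
    return envelope


def calibrate_threshold(rest_envelope, clench_envelope):
    """Assumed rule: midpoint between mean rest and mean clench levels."""
    rest = sum(rest_envelope) / len(rest_envelope)
    clench = sum(clench_envelope) / len(clench_envelope)
    return (rest + clench) / 2.0


def detect_clench_onsets(envelope, threshold):
    """Return sample indices where the envelope first rises above threshold."""
    onsets = []
    above = False
    for i, value in enumerate(envelope):
        if value > threshold and not above:
            onsets.append(i)  # rising edge: treat as one clench "click"
        above = value > threshold
    return onsets


if __name__ == "__main__":
    # Synthetic values for illustration, not recorded EMG.
    threshold = calibrate_threshold([0.1, 0.1], [0.9, 0.9])
    print(detect_clench_onsets([0.1, 0.2, 0.8, 0.9, 0.2, 0.7], threshold))
```

Reporting only rising edges means a sustained clench produces a single confirmation event rather than one per sample.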

Compensating Visual Hand Tracking Artifacts with Wearable Inertial Sensors

Chen Liang, Hsia Chi, Xiyuan Shen, Ying Hu, Yuntao Wang, Chun Yu, Yuanchun Shi, under review

Keywords: Multimodal Fusion, Hand Tracking, Augmented Reality

Visual hand tracking is a prevalent solution for supporting multi-gestural hand interaction in immersive environments. Unfortunately, current visual hand tracking systems struggle with cases such as finger occlusion or demands for higher spatial-temporal resolution, owing to the inherent weaknesses of cameras. In this paper, we examine this problem and explore how wearable inertial sensors can compensate for visual hand tracking artifacts. We first conducted a systematic analysis of visual artifacts by observing visual-inertial channel differences on a basic hand pinching dataset with both normal and edge cases, finding 1) insufficiency in both event and hand dynamic recognition and 2) visual artifacts in terms of delay, occlusion, frequency response, and motion consistency. We proposed an FCN-based pinch recognition model and a visual-inertial filtering algorithm to improve both event detection and hand dynamics, achieving a best accuracy of 96.1% for detecting 8 pinching events. A user study found that our optimized hand tracking outperforms Oculus hand tracking in terms of accuracy, realism, detail preservation, responsiveness, lower delay, fewer artifacts, and overall preference.
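The abstract does not describe the visual-inertial filtering algorithm itself. As a loose illustration of the general idea of fusing the two channels, the sketch below uses a plain one-dimensional complementary filter, which is a swapped-in textbook technique and not the authors' method. It assumes a hypothetical setup in which the camera provides a position sample and the inertial sensor provides a velocity estimate at each step.

```python
def complementary_filter(visual_pos, inertial_vel, dt, alpha=0.9):
    """Fuse camera positions with inertial velocities (1D, illustrative).

    alpha close to 1 trusts the inertial prediction (smooth, responsive);
    alpha close to 0 trusts the camera measurement (drift-free, but laggy).
    """
    estimate = visual_pos[0]
    fused = [estimate]
    for measured, velocity in zip(visual_pos[1:], inertial_vel[1:]):
        predicted = estimate + velocity * dt  # dead-reckoning step
        estimate = alpha * predicted + (1.0 - alpha) * measured
        fused.append(estimate)
    return fused


if __name__ == "__main__":
    # Synthetic example: the camera lags while the fingertip moves steadily.
    camera = [0.0, 0.0, 0.5, 1.5, 2.5]
    velocity = [1.0, 1.0, 1.0, 1.0, 1.0]
    print(complementary_filter(camera, velocity, dt=1.0, alpha=0.8))
```

The inertial term carries the estimate through moments when the camera is delayed or occluded, while the camera term keeps integration drift bounded.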

TextOnly: A Unified Function Portal for Text-Related Functions on Smartphones

Minghao Tu, Chun Yu, Zhi Zheng, Xiyuan Shen, Yuanchun Shi, CCHI 2024

Keywords: App Recommendation, Natural Language Interface, Large Language Model

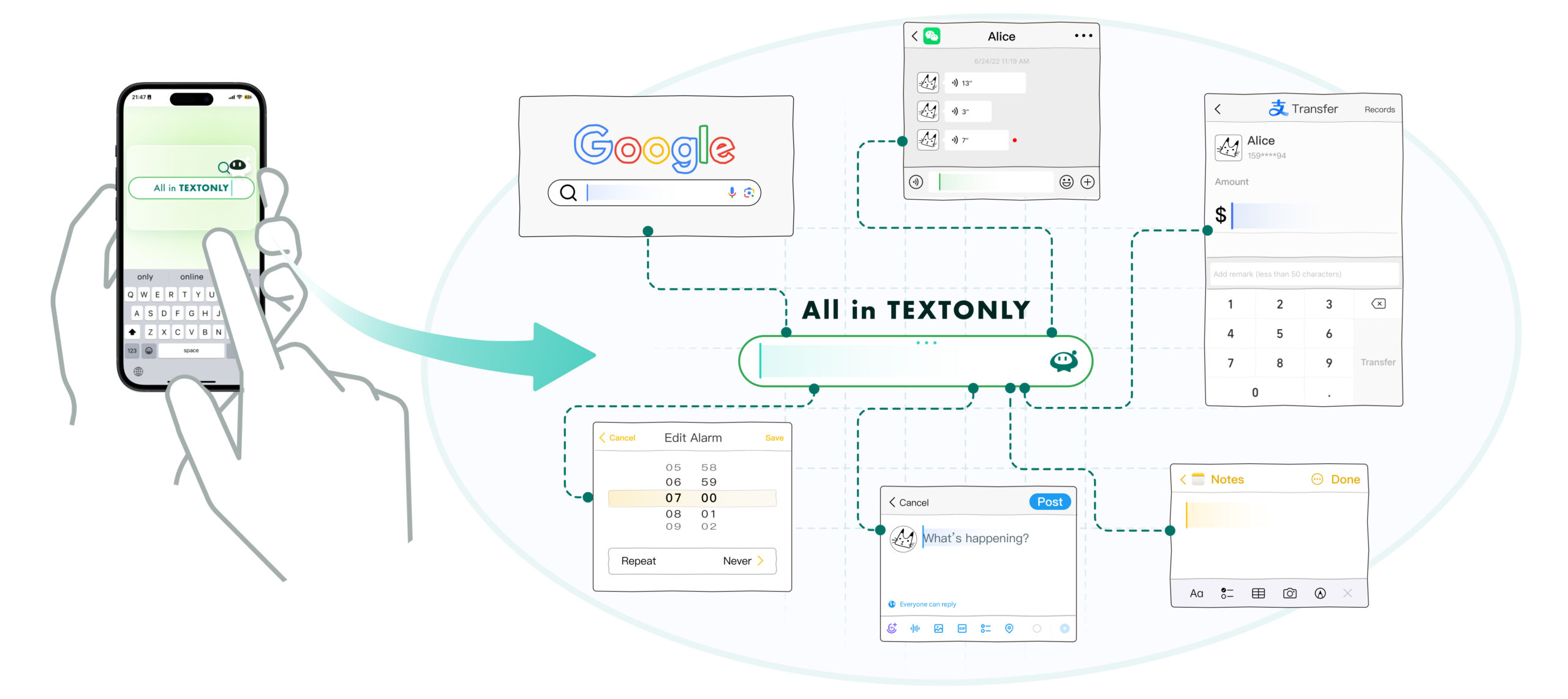

Text boxes serve as portals to diverse functionalities in today’s smartphone applications. However, to reach a specific functionality, users typically need to navigate through multiple steps to access the right text box for input. We propose TextOnly, a unified function portal that enables users to access text-related functions from various applications by simply entering text into a single text box. For instance, entering a restaurant name could trigger a Google Maps search, while a greeting could initiate a conversation in WhatsApp. Although these raw text inputs are brief, they contain rich information, and TextOnly makes full use of it to interpret user intentions effectively. TextOnly integrates a large language model (LLM) and a BERT model. The LLM consistently provides general knowledge, while the BERT model continuously learns user-specific preferences and enables quicker predictions. Real-world user studies demonstrated TextOnly’s effectiveness, with a top-1 accuracy of 71.35%, and its ability to continuously improve both its accuracy and inference speed. Participants perceived TextOnly as having satisfactory usability and expressed a preference for TextOnly over manual execution. Compared with voice assistants, TextOnly supports a greater range of text-related functions and allows for more concise inputs.
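The abstract does not say how the two models are combined at inference time. One plausible arrangement, shown here purely as an assumption, is a confidence-gated fallback: a fast local classifier answers when it is confident and a slower general model is consulted otherwise. The callables, the threshold value, and the function names are all hypothetical stand-ins, and `top1_accuracy` simply illustrates the metric quoted above.

```python
def predict_function(text, fast_model, slow_model, confidence_threshold=0.8):
    """Route a text input to the fast model first, falling back when unsure.

    fast_model(text) -> (label, confidence); slow_model(text) -> label.
    Both are hypothetical callables standing in for real models.
    """
    label, confidence = fast_model(text)
    if confidence >= confidence_threshold:
        return label, "fast"
    return slow_model(text), "slow"


def top1_accuracy(predictions, targets):
    """Fraction of inputs whose single best prediction matches the target."""
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


if __name__ == "__main__":
    # Stub models for illustration only.
    def fast(text):
        return ("maps", 0.95) if "restaurant" in text else ("unknown", 0.2)

    def slow(text):
        return "chat"

    print(predict_function("sushi restaurant", fast, slow))
    print(predict_function("hello there", fast, slow))
    print(top1_accuracy(["maps", "chat", "maps"], ["maps", "chat", "chat"]))
```

Under this arrangement, the share of inputs handled by the fast path would grow as the local model learns a user's habits, which is consistent with the reported improvement in inference speed over time.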

Browse my PORTFOLIO for more interesting projects!